Is AI a demon or what

By Mark Hurst • April 14, 2023

Reactions to ChatGPT and “generative AI” are in full flower right now, as the shock of the new turns into grasping for understanding: what is this thing? What will it become, and what will it do to us?

Paul Kingsnorth (a past Techtonic guest) weighed in this week with an essay called The Universal, in which he suggests that supernatural forces may be at work. Maybe (he argues) AI is a demon, and the AI engineers are magicians unwittingly conjuring a rough beast. Soon the thing will bestride the globe, embodied by all the circuits and silicon and undersea cables, and will eat us all.

I like Paul a lot, but I think he’s gone too far. There are certainly demonic aspects of today’s AI engines, especially in the business models of the companies that own them, but it’s hard to conclude that they’re all combining into some kind of dark leviathan. Disappointingly, Paul points to Tristan Harris and the Center for Humane Technology, a Silicon Valley-based group making sensational claims about tech that often distract from the real issues. Harris and his co-founder Aza Raskin recently teamed up with Yuval Harari, the Sapiens author, to write one of the more ridiculous op-eds I’ve seen in a while. In NYT Opinion on March 24, they write about AI:

We have summoned an alien intelligence. We don’t know much about it, except that it is extremely powerful and offers us bedazzling gifts but could also hack the foundations of our civilization.

Setting aside the bro-speak – can we please stop “hacking” everything – the claim is familiar to anyone who has followed these guys. They’re always saying, essentially, “Digital technology is so amazing and so powerful that we’re all in grave danger, and the only people who can save us are the billionaires running the Big Tech companies – please, Big Tech, save us!” It’s exactly what the oligarchs like to hear: their product is the most powerful thing in the world, and they should be seen as heroes for preventing the machine from eating us.

Get Mark Hurst’s weekly writings in email: Subscribe. (Or join the CG Forum.)

Sign up for this newsletter.

Also promoting this tedious idea is Nick Bostrom, the Oxford philosopher and author of Superintelligence. He’s been issuing spectacular warnings for years now – calculating the odds that AI will extinguish all human life, that sort of thing. One outcome he’s really been hoping for, though, is for AI to turn sentient. Claiming the world is about to end because of a giant spreadsheet, which has created a clever autocomplete function, is not very persuasive. Saying the thing is alive, though, that gets attention.

The problem is, no one has seen any sentience. Even with all of the (admittedly impressive) advances in ChatGPT, it remains, as I described it, a Play-Doh extruder. Squishing out glops of text is a neat hack, but it ain’t alive, any more than an expense report in Excel is alive. (What a creature that would be.)

But Bostrom needs that sentience, baby, so he went to the New York Times this week to sell a new idea: maybe AI is . . . kinda alive? “I have the view that sentience is a matter of degree,” Bostrom said with (I assume) a straight face. The argument goes like this: let’s grant that a spreadsheet is an inert array of electrical impulses and silicon. But now, what if it’s an unthinkably large spreadsheet requiring thousands of processors and a tremendous carbon footprint? Maybe then we can say it’s just a teeny bit sentient? Please?

We can laugh about this dumb idea, but it has some chilling implications, one of which Bostrom floats in the interview. If machines are sentient, we should start treating them with more respect. Bostrom actually said these words:

If an A.I. showed signs of sentience, it plausibly would have some degree of moral status. This means there would be certain ways of treating it that would be wrong, just as it would be wrong to kick a dog or for medical researchers to perform surgery on a mouse without anesthetizing it.

This is to say: Don’t kick a robot dog, and watch your language when you talk to Alexa. These are sentient beings that should be met with proper behavior. As stupid as this idea is, I want to emphasize what a gift it is for the Big Tech oligarchs. It’s hard enough to mount resistance to their crap today, but wait until laws get passed saying “don’t you dare harm the surveillance system, because it has feelings.” All in service of protecting the profits of the tech monopolies.

By the way, “don’t kick a robot dog” is not a joke. New York City just bought two “Digidog” robots for the police department, at a cost of $750,000. During the press conference announcing the thing, the mayor gave an incoherent explanation of why he bought the robots, something about scouring the world for the best technology. Left unexplained was why nearly a million dollars couldn’t have been deployed in some other, less ominous way to actually get human police to do their human jobs. But not to worry, the city super-duper promised never to attach a weapon to the Digidog! I’m sure the precedent of the mobile police robot will definitely stop at this stage, and will never cross that easily crossed line!

But the inevitable arming of robot dogs isn’t the worst of it. Just wait until Digidogs are declared “semi-sentient” and accorded legal protection on par with human police officers. No doubt Bostrom, Harris, and Harari will be on hand to educate us all on the enlightened ways of acting appropriately in the presence of heavily armed and legally protected robot friends.

So – is it a demon or what? A much better answer comes from Adam Conover, in a video called A.I. is B.S. (warning: YouTube link). Apart from the NSFW language, this is more or less what I’ve tried to say on Techtonic and in this newsletter over the past several months. For example, my recent column AI plus whatever argues that the problem isn’t that AI is a demon, it’s that the companies in charge of it are deceptive, exploitative, and unaccountable monopolies.

It turns out that Paul Kingsnorth is right that there’s a demon in our midst. It’s just not the thing that Tristan Harris and Yuval Harari keep harping on. Instead of a sentient “alien intelligence,” what we’re facing is an evil that arose well before ChatGPT. It’s the concentration of power and wealth, the growth-at-any-cost ethic, the rationalizing of all sorts of predation and violence, even genocide. AI systems are just an outgrowth of a more ambient, insidious threat that is enmeshed and entangled in our daily lives. If we really want to slay the demon, we should do something about Big Tech.

- - -

Further links

• Clear, concise explanation of ChatGPT by Dwayne Monroe on Doug Henwood’s show.

• On the “AI pause” open letter, criti-hype, and Nick Bostrom, by Tante.

• Forget AI dystopias, writes Cory Doctorow – what we need is “a privacy law, ad-tech breakups, app-store competition and end-to-end delivery [to] shatter the power of Big Tech and shift power to users, creative workers and media companies.” Read his excellent April 8 post (and if you missed it, listen to my interview with Cory, linked in AI plus whatever).

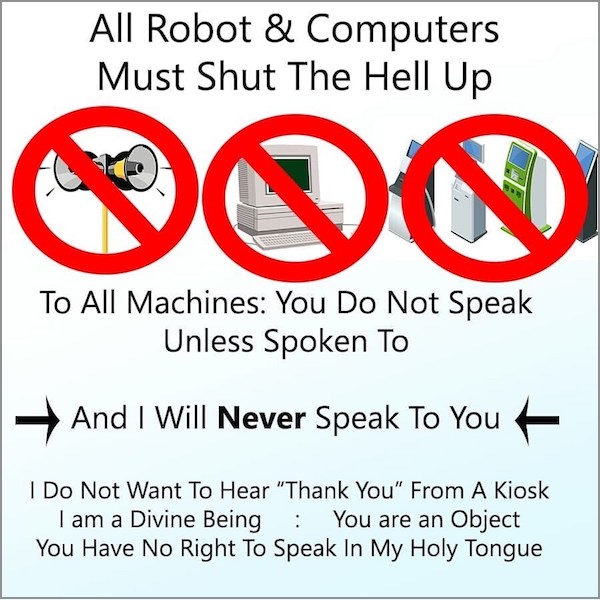

• Finally, I know I included the meme below in last month’s ChatGPT’s dangers are starting to show, but it bears repeating:

Until next time,

-mark

Mark Hurst, founder, Creative Good – see official announcement and join as a member

Email: mark@creativegood.com

Read my non-toxic tech reviews at Good Reports

Listen to my podcast/radio show: techtonic.fm

Subscribe to my email newsletter

Sign up for my to-do list with privacy built in, Good Todo

On Mastodon: @markhurst@mastodon.social

- - -