Is your phone listening to you? A response to the "toothpaste" thread

By Mark Hurst • May 27, 2021

First, about last week...

Be careful about writing anything negative about Apple. I've heard it said before, but this past week I experienced it.

Last week's column, Apple is rotting, drew a surprisingly negative response: a wave of newsletter unsubscribes, as well as emails and social media comments: "hyperbolic clickbait," "conspiracy theory," and the inevitable "aaaaactually" guys. (Apparently I need to brush up on computational photography before I'm qualified to question what Apple is doing.)

On the other hand, a number of encouraging notes also came in, and some new subscribers as well (welcome, if you're among them!). But I found the negative feedback instructive because it mostly boiled down to one idea: "But I like this feature." The column, you may remember, focused on Apple's Live Photos, a default setting on iPhones that captures a 3-second video, with sound, every time you think you're just taking a photo.

My point wasn't to criticize anyone who likes the feature, but rather to examine Apple's strangely horrible UI design - it's very difficult to turn off Live Photos - and to show how Apple's business model is perfectly aligned with keeping the feature activated. In other words, Apple is showing all the signs of the user-exploitative design that Facebook, Google, and Amazon have been practicing for years. I wrote back in January, in Why I'm losing faith in UX, that UX now often stands for "user exploitation." Apple's Live Photos fits the pattern perfectly.

The "toothpaste" thread

With "Apple is rotting" freshly published, I was interested to see a somewhat related message go hugely viral on Twitter. On May 24, Robert G. Reeve posted a thread about getting toothpaste ads after visiting his mother. The ads, for the toothpaste brand his mother uses, started appearing just after his visit. How did the surveillance gods do it? Reeve gives a clear and accessible explanation of how adtech surveillance can tell who you're with - by shared IP address and GPS proximity - after which the algorithms hammer you with ads for products that your family buys.
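For readers who think in code, here is a rough sketch of the kind of matching the thread describes: linking two devices because they share an IP address or sit physically close together. Everything in it is my own illustration, not any company's actual system: the `Sighting` record, the `linked_devices` function, the 50-meter threshold, and the sample data are all assumptions.

```python
from dataclasses import dataclass
from itertools import combinations
from math import asin, cos, radians, sin, sqrt


@dataclass(frozen=True)
class Sighting:
    """One hypothetical observation of a device (assumed record shape)."""
    device_id: str
    ip: str
    lat: float
    lon: float


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two lat/lon points."""
    earth_radius_m = 6_371_000.0
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * earth_radius_m * asin(sqrt(a))


def linked_devices(sightings, max_distance_m=50.0):
    """Map each pair of device IDs to the signals that tie them together.

    The 50 m threshold is an arbitrary assumption for illustration.
    """
    links = {}
    for a, b in combinations(sightings, 2):
        if a.device_id == b.device_id:
            continue
        reasons = []
        if a.ip == b.ip:
            reasons.append("shared_ip")
        if haversine_m(a.lat, a.lon, b.lat, b.lon) <= max_distance_m:
            reasons.append("gps_proximity")
        if reasons:
            pair = tuple(sorted((a.device_id, b.device_id)))
            links[pair] = reasons
    return links


if __name__ == "__main__":
    # Made-up sample data: two phones on the same home network, one elsewhere.
    sample = [
        Sighting("son_phone", "203.0.113.7", 40.0000, -75.0000),
        Sighting("mom_phone", "203.0.113.7", 40.0001, -75.0001),
        Sighting("stranger_phone", "198.51.100.2", 41.0000, -75.0000),
    ]
    for pair, reasons in linked_devices(sample).items():
        print(pair, reasons)
```

Once two devices are linked this way, the purchase history attached to one can be used to pick ads for the other. That is the toothpaste story in miniature, and note that it requires no microphone at all.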

Reeve's thread "won Twitter," as the kids say. Over a quarter-million likes, and over a hundred thousand shares. Several people sent it along to me, too: "Have you seen this?"

I had seen the thread. Something about it bothered me. While it's good to see people gaining greater awareness of surveillance, the thread started off on the wrong foot and left a dangerous gap in its explanation. Reeve begins with the obvious question - "Is your phone listening to you?" - and then flatly declares, no. What's more, Reeve writes, anyone warning about audio surveillance is promoting a "conspiracy theory."

There's a certain condescension in that phrase. Oh, the poor benighted dear, thinking that a device might be listening in: he's fallen into a conspiracy theory, a rabbit hole, believing the Big Tech companies are doing something sinister. I guess I was a little sensitive, having just been called a conspiracy theorist for my Apple column last week. So I decided to say something.

I went onto Twitter yesterday and banged out my response in real time (here's my original Twitter thread). While it didn't go viral like the thread it's responding to, it still brought back some good comments. If nothing else, I was happy to bring attention to other good people who are raising the alarm about Big Tech. You'll see them listed at the bottom of the thread. Here it is:

My response to the "toothpaste" thread (posted May 26, 2021)

About that "toothpaste" thread going around, explaining Big Tech surveillance based on proximity and IP address: a thread.

I appreciate the clear and simple explanation of how surveillance works in everyday life. And it's gratifying to see the thread shared widely. So - nice job, @RobertGReeve - and thank you.

However.

My only quibble (and it's just a quibble, not to take away from what overall is a very helpful thread) - is in the 2nd post in the thread, saying the surveillance is "not listening to you," that it's a "conspiracy theory," and that it's been "debunked."

Wow - a little harsh.

It's hard to prove a negative, as we know. But the sources I've seen "debunking" surveillance-via-microphone? Usually they're the surveillance companies themselves - "Heavens, no, we'd NEVER do something like that!"

Also, that Reply All episode... hm.

There's also the vaguely ridiculous "experiment" people have tried: sitting by their phone and repeating a word over and over again - something like "Tahiti, Tahiti, Tahiti" - and then checking socials to see if they got an ad for a trip to Tahiti.

As if that would work anyway.

These algorithms, whether they use the microphone or not, they're operating at scale - millions and maybe billions of data points, interactions, people, posts. You're not gonna reverse-engineer the algorithm by repeating a word 5 times to one surveillance-device phone. C'mon.

In contrast, I'd like to share something from one of the leading Big Tech surveillance companies: Amazon.

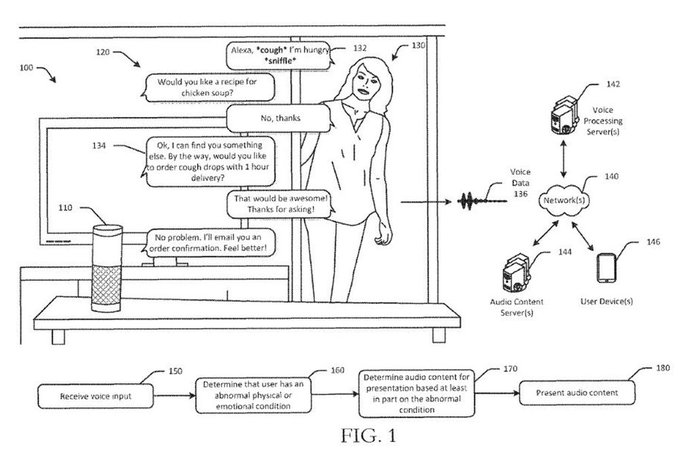

Here's an image from an Amazon patent application, reported by Bloomberg in May 2019. Look carefully at the friendly Alexa cartoon:

The flowchart spells it out: "Receive voice input", "Determine that the user has [some sort of] condition", and then take actions based on that surveillance to sell product... or whatever else happens in the "Voice Processing Server(s)".

This is a mobile device, from a Big Tech company, using the microphone to listen in ways that aren't transparent to the user. In other words, it's not just listening for command words: it's listening for ANYTHING that will help sell product or advance the business model.

Now every time an outrageous patent application gets publicized like this, the Big Tech company hastens to get their PR out front: "Oh, {nervous chuckle}, those patent applications are just for FUN. We don't mean them SERIOUSLY. It's just a silly idea we'd NEVER pursue."

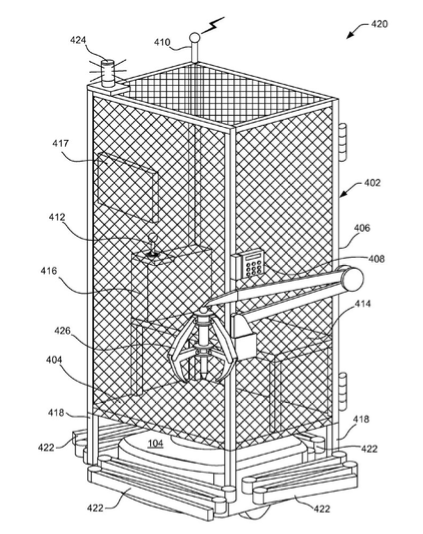

Like the time Amazon filed a patent application for a cage to put a human worker in. I'm not making this up: the image below is from an Amazon patent application for a caged human employee, so they wouldn't get in the way of the robots.

And in fact Amazon got the patent. See the Seattle Times story from 2018. Not to worry, though: Amazon said it had "no plans" to actually install a cage for a human. (Probably said with a nervous chuckle.)

My point here is that INTENT MATTERS. And the BUSINESS MODEL REALLY MATTERS.

And for surveillance-via-microphone, Big Tech companies have shown both the INTENT and the BUSINESS MODEL for audio surveillance to be profitable.

Can we really call this "debunked"?

I'll grant that Facebook, Google, Amazon, Apple, and Microsoft **might** not be **currently** using audio stolen from users' microphones to feed into their toxic stew of adtech/surveillance/manipulation/control algorithms.

*Might* not. For now.

But one thing I've learned over the years is that TRAJECTORY IS REALLY, REALLY IMPORTANT. Just look at the arc of these companies over the past 10 years.

With the hardware, algorithms, & business model in place, and a complete lack of ethics - you think they wouldn't listen in?

What's more, the companies are grabbing audio even when it's not needed.

Great example: Apple's Live Photos, which I wrote about a few days ago (Apple is rotting. A look at Live Photos).

Tell me again - why does Apple need to record **3 SECONDS OF AUDIO** just so someone can take a PHOTO?

My point is that Apple might have some motivation (if not today, then soon) to habituate us to recording audio when it's not necessary.

Much like Amazon stated, explicitly, their idea to do the same thing.

Again, look at the TRAJECTORY of the system.

So I really bristle at the suggestion - as the "toothpaste" thread did - that this idea is some sort of conspiracy theory that's been "debunked again and again." That sort of messaging does us all a disservice.

Proximity/IP surveillance is real, but audio is a real threat, too.

While I'm at it, just one more quibble (and again, no disrespect to the thread author). But he suggests that "we just give away the data and nobody cares." As though everyone is fine with the surveillance-manipulation system we're subject to.

Sorry, no.

Many people use horrible Big Tech services because there are no alternatives (because the monopoly tech cos stamped out all competition).

Using this sludge is increasingly a de facto requirement for employment, education, citizenship, you name it.

And that's wrong.

So we don't give up our data because "we don't care," because somehow we've decided that we love Google so much that we can excuse it, or because we've decided, you know what, maybe Facebook is actually a decent company.

To the contrary.

Many people hate the services but use them anyway - because they have no choice.

And many, MANY people simply don't know what these services are doing - because their media diet is filtered and dominated by these exact same Big Tech companies.

The good news is that there's a team on the field that is organizing a resistance: doing research, discovering just how awful these companies are, and writing and speaking out about their (growing) exploitation and abuse.

If you're not already, follow these good people. (This isn't an exhaustive list, truly I'm just trying to get this thread out quickly.) @hypervisible @UpFromTheCracks @shannonmattern @LMSacasas @ubiquity75 @safiyanoble @STOPSpyingNY @FoxCahn @FrankPasquale @themarkup @swodinsky

As for me, I'm just one person trying to speak out - as well as amplify the voices of other people on the team who are doing such important work.

If you're interested in my work:

• My email newsletter: Sign up here.

• My radio show & podcast: techtonic.fm

P.S. More good people to follow. Still not an exhaustive list, but these are important voices - all featured on Techtonic - in helping resist Big Tech: @_jack_poulson @sivavaid @ireneista @adriandaub @lizjosullivan @bentarnoff @moiragweigel @jathansadowski

Update June 5, 2021: Is My Phone Listening in? On the Feasibility and Detectability of Mobile Eavesdropping (paper by Jacob Leon Kröger and Philip Raschke, June 11, 2019).

Comment on this column: Creative Good members, post a comment on the Creative Good Forum. If you're not a Creative Good member, read the official announcement and join us!

Until next time,

-mark

Mark Hurst, founder, Creative Good - see official announcement and join as a member

Email: mark@creativegood.com

Read my non-toxic tech reviews at Good Reports (see the new entry on Removing your info from data brokers)

Listen to my podcast/radio show: techtonic.fm

Subscribe to my email newsletter

Sign up for my to-do list with privacy built in, Good Todo

Twitter: @markhurst
